Using AI tooling for unit testing in JetBrains Rider

Introduction

In the previous blog post, I used Visual Studio and GitHub Copilot to generate unit test code. Visual Studio is widely used, but so is JetBrains Rider, which I use a lot myself, especially when working on my MacBook. In this blog post, I will show what the new AI code-generation features of JetBrains Rider (AI Assistant) can do for you as a developer. I used version 2023.2 on a MacBook Pro M1 for this post. The AI tooling is still in preview and not yet available to the general public, and the pricing of the AI plugin is not known at this time.

Example

The example for this blog post is the same as in the post about Visual Studio and GitHub Copilot. To refresh your memory, or in case you have not read that post, here is a short summary of the test case: a probably well-known example of an order with order line items.

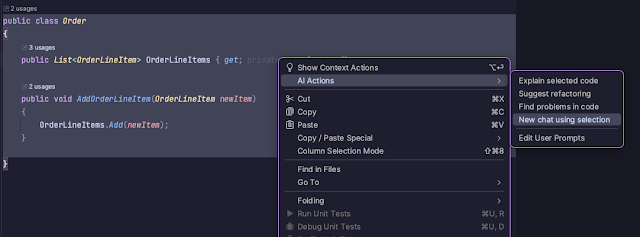
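As a minimal sketch of such a model: the class names `Order` and `OrderLineItem` come from this post, but the property names `LineItems`, `Description`, `Price` and `Quantity` are assumptions for illustration and may differ from the original example.

```csharp
using System.Collections.Generic;

// Hypothetical model: the property names are assumed for illustration only.
public class OrderLineItem
{
    public string Description { get; set; } = string.Empty;
    public decimal Price { get; set; }   // price per unit
    public int Quantity { get; set; }    // number of units ordered
}

public class Order
{
    // An order is simply a collection of line items in this sketch.
    public List<OrderLineItem> LineItems { get; } = new List<OrderLineItem>();
}
```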

Just as in the previous blog post, I asked the AI tooling to generate a property that calculates the total price. To do so, I started a chat for the code of the order.

In the new chat window, I asked Rider to generate a property to return the total order sum.

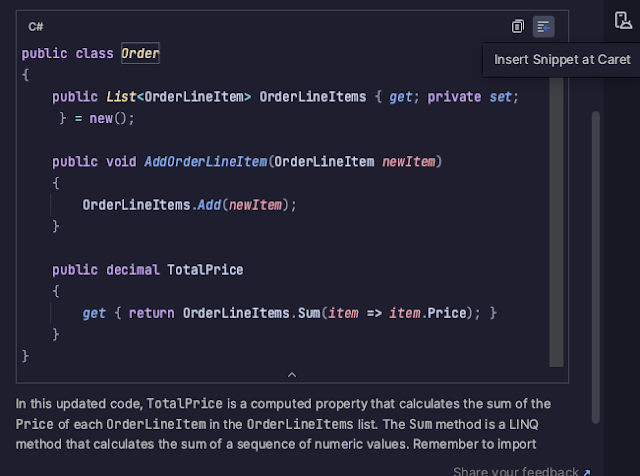

The AI Assistant generated the following code.

With "Insert Snippet at Caret", the generated code can be copied into the code of the project. Rider does not offer the option to insert just the new code at this time. For our example, replacing the entire code is not a big problem, but I would not want to do this if the code contained 100 lines or more.

Along with the code, the assistant also generates an explanation of it.

Personally, I don't like the generated code as it stands. I would prefer the expression-bodied syntax for this example, so I asked the AI Assistant to refactor the code.

The refactoring produced the code I was looking for in the first place.
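To illustrate the difference between the two styles, here is a sketch that puts a block-bodied getter next to its expression-bodied equivalent. It is not the assistant's actual output: the names `LineItems`, `Price`, `Quantity`, `TotalPrice` and `TotalPriceBlockBodied` are assumptions made for this illustration.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model: property names are assumed for illustration only.
public class OrderLineItem
{
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    public List<OrderLineItem> LineItems { get; } = new List<OrderLineItem>();

    // Block-bodied getter: an explicit loop that accumulates the total.
    public decimal TotalPriceBlockBodied
    {
        get
        {
            decimal total = 0m;
            foreach (var item in LineItems)
            {
                total += item.Price * item.Quantity;
            }
            return total;
        }
    }

    // Expression-bodied equivalent: same result in a single line using LINQ.
    public decimal TotalPrice => LineItems.Sum(item => item.Price * item.Quantity);
}
```

Both properties return the same value; the expression-bodied form simply removes the ceremony around a single expression.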

Adding first tests

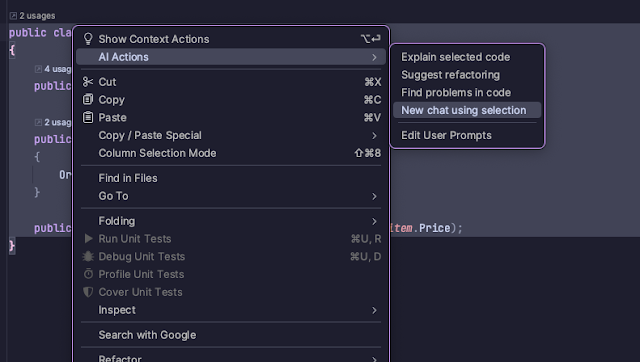

The code now contains some logic that is worth testing, so I generated unit tests by launching a new chat based on the entire code for Order.

I used the following prompt.

The AI Assistant indicated that the code would be generated based on xUnit and FluentAssertions. I am in favour of using FluentAssertions in test code, but I did not ask the AI to use this component.

Only two test cases were generated for the code.

The AI assistant also generated a new definition for the OrderLineItem class.

I assume that most .NET developers are not familiar with FluentAssertions, so I asked AI Assistant to change the code so that it no longer depends on it.

I inserted this generated code into a new test project and removed the generated OrderLineItem class.

The generated code compiled on the first try and even ran correctly.

Just as I did in the blog post about GitHub Copilot, I asked AI Assistant to generate a unit test for an empty list.
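For readers who want a feel for what two such tests can look like with plain xUnit assertions, here is a sketch. It is not the generated code itself: the test names, the sample values and the property names `LineItems`, `Price`, `Quantity` and `TotalPrice` are assumptions for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical model: property names are assumed for illustration only.
public class OrderLineItem
{
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    public List<OrderLineItem> LineItems { get; } = new List<OrderLineItem>();
    public decimal TotalPrice => LineItems.Sum(item => item.Price * item.Quantity);
}

public class OrderTests
{
    [Fact]
    public void TotalPrice_SingleLineItem_ReturnsPriceTimesQuantity()
    {
        var order = new Order();
        order.LineItems.Add(new OrderLineItem { Price = 10m, Quantity = 2 });

        // With FluentAssertions this would read: order.TotalPrice.Should().Be(20m);
        Assert.Equal(20m, order.TotalPrice);
    }

    [Fact]
    public void TotalPrice_MultipleLineItems_ReturnsSumOfAllLines()
    {
        var order = new Order();
        order.LineItems.Add(new OrderLineItem { Price = 10m, Quantity = 2 });
        order.LineItems.Add(new OrderLineItem { Price = 5m, Quantity = 1 });

        Assert.Equal(25m, order.TotalPrice);
    }
}
```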

This does the job and also runs successfully.
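A test for the empty case could be sketched as follows. Again, the names `LineItems`, `Price`, `Quantity` and `TotalPrice` are assumptions for illustration, and the sketch assumes that an order without line items should report a total of zero.

```csharp
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical model: property names are assumed for illustration only.
public class OrderLineItem
{
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    public List<OrderLineItem> LineItems { get; } = new List<OrderLineItem>();
    public decimal TotalPrice => LineItems.Sum(item => item.Price * item.Quantity);
}

public class OrderEmptyListTests
{
    [Fact]
    public void TotalPrice_NoLineItems_ReturnsZero()
    {
        // A freshly created order has an empty list of line items.
        var order = new Order();

        Assert.Empty(order.LineItems);
        Assert.Equal(0m, order.TotalPrice);
    }
}
```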

Adding the discount

AI Assistant does not work directly in the editor at this moment, so in order to change the code to take discounts into account, I have to use an AI chat window.

I asked the AI to change the code.

The following code for the discount is generated.

The logic in the getter is correct: only one discount percentage is applied. The else-parts could have been omitted, but taking code readability into account, I don't mind the extra code.

Since this blog post is mostly about unit testing with AI, the next step is to generate a unit test for this property using the following prompt.
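To make the remark about the else-parts concrete, here is a sketch of a getter with that shape. The discount rules are not spelled out in this post, so the thresholds (5% from 500, 10% from 1000) and the property name `DiscountPercentage` are purely hypothetical, as are `LineItems`, `Price`, `Quantity` and `TotalPrice`.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model: property names are assumed for illustration only.
public class OrderLineItem
{
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    public List<OrderLineItem> LineItems { get; } = new List<OrderLineItem>();
    public decimal TotalPrice => LineItems.Sum(item => item.Price * item.Quantity);

    // Hypothetical tiers: 10% from 1000, 5% from 500, otherwise no discount.
    // The highest tier is checked first, so exactly one percentage is returned.
    public decimal DiscountPercentage
    {
        get
        {
            if (TotalPrice >= 1000m)
            {
                return 10m;
            }
            else if (TotalPrice >= 500m)
            {
                return 5m;
            }
            else
            {
                return 0m;
            }
        }
    }
}
```

Because every branch returns immediately, the `else` keywords are redundant; they only make the mutually exclusive tiers more explicit.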

The AI assistant did understand what I wanted because it replied with the following text.

The boundary values are correctly identified. The assistant generated a number of test cases for me.

The test cases for the boundary values and for the values just above them were all generated. The unit tests for the values just below the boundaries were promised, but not generated, so I asked AI Assistant to generate them.

Now the missing test cases are generated.

I was still not satisfied with the coverage of the entire range, so I asked AI Assistant to generate some more test cases.

The assistant gives me exactly what I am looking for.

I do have to fix some code generation flaws like LineItem, but all in all, I am quite satisfied with the generated tests.
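The boundary-value approach described in this section can be sketched as a single xUnit theory covering the values just below, on and just above each boundary, plus a few values spread across the range. The thresholds (500 and 1000), the percentages and all property names are hypothetical, chosen only to make the sketch self-contained. Whole-number amounts are used because C# attribute arguments cannot be of type `decimal`.

```csharp
using System.Collections.Generic;
using System.Linq;
using Xunit;

// Hypothetical model and discount tiers, assumed for illustration only.
public class OrderLineItem
{
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    public List<OrderLineItem> LineItems { get; } = new List<OrderLineItem>();
    public decimal TotalPrice => LineItems.Sum(item => item.Price * item.Quantity);

    public decimal DiscountPercentage =>
        TotalPrice >= 1000m ? 10m :
        TotalPrice >= 500m ? 5m :
        0m;
}

public class OrderDiscountTests
{
    [Theory]
    // Around the first (hypothetical) boundary of 500.
    [InlineData(499, 0)]
    [InlineData(500, 5)]
    [InlineData(501, 5)]
    // Around the second (hypothetical) boundary of 1000.
    [InlineData(999, 5)]
    [InlineData(1000, 10)]
    [InlineData(1001, 10)]
    // A few values spread across the rest of the range.
    [InlineData(0, 0)]
    [InlineData(250, 0)]
    [InlineData(750, 5)]
    [InlineData(5000, 10)]
    public void DiscountPercentage_ReturnsExpectedTier(int total, int expectedPercentage)
    {
        var order = new Order();
        order.LineItems.Add(new OrderLineItem { Price = total, Quantity = 1 });

        Assert.Equal((decimal)expectedPercentage, order.DiscountPercentage);
    }
}
```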

Conclusion

I am quite pleased with the quality of the generated code. Of course, tuning the generated code still takes knowledge and experience from the developer. AI Assistant understands some theoretical aspects of unit testing, such as boundary values, better than GitHub Copilot and can generate unit tests based on this knowledge. At the time of writing, AI Assistant is still in preview. I hope that JetBrains enhances AI Assistant with more context about the current code base. That would make the generated code more usable without the need for code changes by the developer.
